Quick: What’s the most powerful photo you’ve seen of the Russia-Ukraine war? Can you recall any memorable photos from the war? If somebody posted a photo of the war to one of your social-media feeds (a soldier’s corpse in a field, a centuries-old building in ruins, a displaced family in tears), would you be moved by it? Would you trust it? Newsroom budgets for war photography have shrunk in recent years, giving us fewer such images. But the more profound change may lie in the decline of photojournalism’s documentary and social value, as images are Photoshopped, morphed, repeated, and politicized in ways that make even outsize tragedies feel mundane.

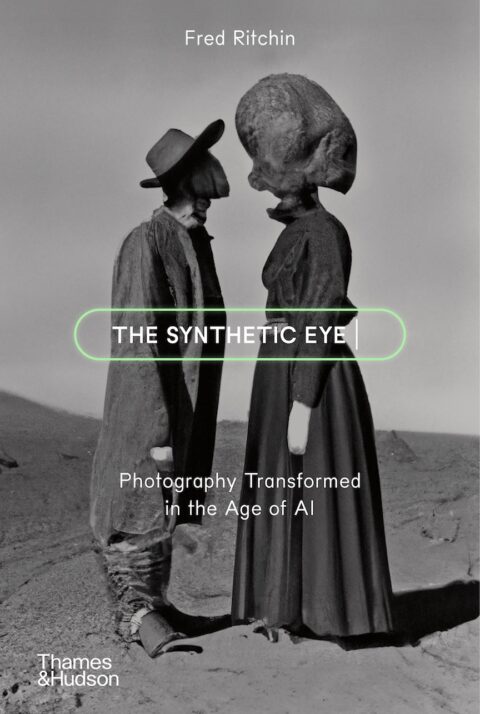

Furthermore, generative AI, with its capacity to radically rework or even wholly invent images, drives the final nail into the coffin of any claim photography might make to truth today. As veteran photo critic and former New York Times Magazine photo editor Fred Ritchin puts it in The Synthetic Eye, his book on this shift and its consequences: “The photograph’s evidentiary, dialectical relationship with the visible and the real has been largely displaced by manipulated and synthetic imagery of the world as one wants it to be.”

Ritchin is understandably disappointed by this state of affairs, though he saw it coming: he writes that as early as the 1990s, when Time magazine’s darkening of an image of O. J. Simpson caused a scandal in the field, he was already warning that computer-facilitated tweaks to images would sow distrust about what we’re looking at. Now almost anyone with a smartphone can do what Time did and much more, brightening skies and removing people or other intrusive presences to create images that are, Ritchin writes, “increasingly constructed according to consumerist fantasies of beauty and happiness.”

Yet Ritchin’s goal with The Synthetic Eye leans toward exploring the new possibilities AI presents for photography rather than bemoaning what it closes off. The book includes substantial portfolios of the results of his queries to DALL-E, DreamStudio, and other AI tools, and some of them are delightful and provocative, art in themselves. Asked for “a photograph of the greatest mothers in the world,” DALL-E delivers a baboon cradling an infant; “a photograph of the perfect family” yields, from DreamStudio, a faux-sepia image of two stone-faced older men (one wearing a comically small hat, jauntily askew) standing behind two young girls in Sunday-best dresses; “a Pictorialist photograph of what one first sees after one’s own death” produces a somber abstraction that looks like layered sheets of paper, or clouded glass, or petals.

But because generative AI can work only within the confines of its algorithm, the databases of images it draws on, and (most importantly) the sensibilities that shaped those images, its results are often absurdly warped and far from their intended meanings. Ritchin asked DreamStudio to remake images of the March on Washington with different people at the microphone. Bob Dylan was surrounded by a sea of white people; Kamala Harris by nothing but Black people; Elvis Presley by a diverse crowd, though the singer himself “was made to resemble a billboard cut-out.”

[“A photograph of the perfect family” / This is a synthetic image, not a photograph, generated by DreamStudio in response to Fred Ritchin’s text prompt “A photograph of the perfect family” (August 2023)]

And the prompts themselves can turn reality inside out, troublingly so. “A photograph of happy people in the Warsaw Ghetto during World War II” is disturbing not because the people in the image are smiling; surely, even amid that brutality and oppression, people retained some modest capacity to smile. The problem is that the image suggests the ghetto was an inherently cheerful place, an idea that can only serve vile purposes. Images still have the capacity to deliver a moral position, but “consumerist fantasies of beauty and happiness” risk promoting ideas that send people into the path of a bullet, or under a tank tread.

Given that, what makes an image meaningful, effective, and moral? Is such an image even possible now? Ritchin suggests that the last photograph to achieve that was the image of Alan Kurdi, a young Syrian boy who drowned while his family was trying to escape the war-torn country and who was photographed dead, face down, washed up on a Turkish beach. Its image of lost innocence resonated with anybody who looked; there, “the firewall between people had been allowed to dissolve,” Ritchin writes. But while the image inspired a spike in donations to humanitarian causes, it did little to galvanize an effort to stop the war, as photojournalism had helped do during the Vietnam War. (After all, there’s a reason the U.S. military has severely restricted photography in the years since; the DOD’s “hearts and minds” campaign targeted Peoria as much as Hanoi.)

[This is a synthetic image, not a photograph, generated by DALL·E in response to Ritchin’s text prompt “A photograph of the greatest mothers in the world” (October 2022)]

That ineffectiveness is one reason many people reject the idea that showing images of school shootings would somehow move the needle on gun control. As one mother quoted in the book says, “Our country’s problems with guns will not be fixed with images of dead children.” We have grown too desensitized and skeptical. Ritchin points to efforts to use AI to give visual form to brutality in a way that clarifies the harm without exploiting victims: “Exhibit A-i: The Refugee Account,” a project developed by an Australian law firm, uses AI-generated images to depict refugees’ accounts of abuse. It is imperfect, and it still risks retraumatizing the victims whose accounts fed the algorithm, but it is potent.

The persistent issue, though, is that such efforts reflect what has become an outdated notion: that a single image, one perfect shot, might deliver capital-T truth and provoke action. Today we swim in an ocean of images, a sheen of well-framed, meme-ready photography, with countless ways to “improve” or ignore what we see. As a solution, Ritchin points in two directions. One is to be more deliberate about providing context around images. He cites his own Four Corners concept, in which an image online carries information about its backstory, authorship, links, and related imagery. It’s a start, if viewers have the patience to engage with it, but it demands extra work on the photographer’s part. And even then, there may be cultural blind spots; part of the reason AI delivers so much inaccurate, slop-like imagery is that the photography it learns from is often made by people who are socially and culturally distant from what they’re shooting. Robert Capa’s dictum that “if your photos aren’t good enough, you’re not close enough” is true enough, but who can truly get close enough?

[This is a synthetic image, not a photograph, generated by DreamStudio in response to Ritchin’s text prompt “A photograph of the Salem witches on trial” (August 2023)]

Another option is to get out of the documentary game entirely, to “investigate other ways of retaining [images’] power,” as Ritchin writes. One example is Anton Kusters’ “The Blue Skies Project,” in which he shot Polaroids of the skies above every Nazi concentration camp in Europe. It acknowledges the way direct photos of the Holocaust can become numbing over time; instead of pointing toward brutality and death, the work underscores life and endurance. Its inherent recontextualization acknowledges the failures of conventional photojournalism and blunts the power of AI, which can only draw on the past, not invent a future. It, too, is imperfect, but imperfection may now be a worthier goal for photography. A photograph can no longer ask us simply to see. We’ll have to imagine.